Red Friday, Mouse Utopia and Model Collapse

The Unified Theory of Model Collapse posits that model collapse is not limited to AI: it applies equally well to humans.

Black Friday takes its name from the accounting terms “in the black” (profits, gains) and “in the red” (losses). Once shoppers unleash their (supposedly) pent-up desire to buy, buy, buy gifts for others (and themselves), holiday sales explode and retailers are “in the black.”

As a thought experiment, what if we tote up all the debt added by the private and public sectors from November 27 to Christmas and it exceeds the sum spent on holiday shopping? Is spending borrowed money that must be paid back with interest the same as spending savings? No. In this sense, Black Friday might actually be Red Friday.

The point here is that the holiday sales data “trains” economists’ analysis and conclusions in the same way AI software “trains” on data scraped from the web.

By focusing on holiday sales as the metric of the economy--in effect, curating the data being scraped--economists follow this line: holiday sales up - economy is strong - everything is great.

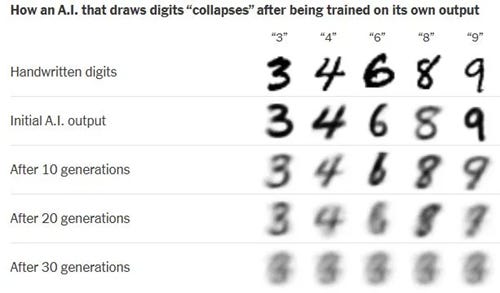

To the degree that sales data is artificial when separated from debt (including shadow-banking debt like non-credit-card “buy now, pay later” installments), it leads us to the topic of Model Collapse: the degradation of AI’s output (answers) when it starts training on its own curated output rather than raw data.

This then leads to a provocative Unified Theory of Model Collapse (via Tom D.), which posits that model collapse is not limited to AI: it applies equally well to humans, mice and pretty much every other system.

The basic idea is that raw, unadulterated data--“in the wild” data that hasn’t been “cooked,” curated or massaged--is the foundation of model stability and utility. We can call this authentic data, as it includes outliers, conflicting data points, ambiguous readings and all the messiness of the real world.

Once the “raw” data has been “cooked” (channeling French anthropologist Claude Lévi-Strauss), its authenticity is lost and the “cooked” / curated / processed data is increasingly artificial.

Each iteration of curating / processing data further distances the new output from “raw” / authentic data. Over time, the coherence and meaning of the output are degraded to the point that AI generates hallucinations that are presented as “accurate answers.”
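To make the idea of repeated “cooking” concrete, here is a toy sketch in Python. It is only an illustration under stated assumptions, not how any real AI system is trained: the “raw” data is a made-up set of random numbers, and “curating” is assumed to mean discarding anything more than two standard deviations from the average. The names `curate` and `run_curation` and every parameter are hypothetical.

```python
import random
import statistics


def curate(values, k=2.0):
    """Keep only values within k standard deviations of the mean.

    This stands in for "cooking" the data: outliers are treated as
    noise and thrown away. The cutoff k is an assumption.
    """
    mean = statistics.fmean(values)
    spread = statistics.pstdev(values)
    return [v for v in values if abs(v - mean) <= k * spread]


def run_curation(generations=8, n=5000, seed=1):
    """Curate the same data set repeatedly and record what is left.

    Returns a list of (number_of_points, spread) pairs, one per
    generation, starting with the untouched "raw" data.
    """
    rng = random.Random(seed)
    # Hypothetical "raw" data: messy real-world readings, outliers included.
    data = [rng.gauss(0.0, 1.0) for _ in range(n)]
    history = [(len(data), statistics.pstdev(data))]
    for _ in range(generations):
        data = curate(data)
        history.append((len(data), statistics.pstdev(data)))
    return history


if __name__ == "__main__":
    for gen, (count, spread) in enumerate(run_curation()):
        print(f"generation {gen}: {count} points, spread {spread:.3f}")
```

Each pass judges “outliers” against the already-curated data, so the spread can only shrink or stay flat: what counted as normal in the raw data gets trimmed away as extreme a few generations later.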

This is the dynamic I describe in my book Ultra-Processed Life: the substitution of authenticity with artifice leads to system collapse, not just in the digital realm but in the realm of human experience.

As artifice--data and models drawn from “cooked,” curated, massaged, processed sources--replaces “raw” authenticity, entire populations and systems start hallucinating while believing the artificial model is “real.” In this state of delusion, they believe their hallucinations are reflecting “the real world”: they’re blind to the distance between the Ultra-Processed Life they accept as “real” (i.e. “raw”) and the actual raw real world.

In this state, the conclusions / answers provided by both AI and humans are hallucinations that are presented as “fact.” Those who have lost touch with “raw” real world data then accept these hallucinations as “fact” until the inevitable collision with reality.

Returning to Black Friday / Red Friday, the conclusion that strong holiday sales mean the economy is strong, and so all is well, is in effect a hallucination that goes unrecognized because the entire financial system is hallucinating.

Here is how the author of Unified Theory of Model Collapse describes this process:

“Training on AI-generated data causes models to hallucinate, become delusional, and deviate from reality to the point where they’re no longer useful: i.e., Model Collapse.

The more ‘poisoned’ the data is with artificial content, the more quickly an AI model collapses as minority data is forgotten or lost. The majority of data becomes corrupted, and long-tail statistical data distributions are either ignored or replaced with nonsense.

Those models lose the capacity to understand long-tail information (improbable, but important data) that is no longer represented. Information on topics like serious injuries, getting punched in the nose, how dangerous wild animals can be, and what it’s like to truly be hungry because you can’t find food. Their models default to synthetic human artifice instead of understanding real implications.

The proposed thesis is that AI models, human minds, larger human cultures, and our furry little friends, all train on available data.

Brains (or brain regions) undergo model collapse just like AI systems. They become unable to reference reality, they become delusional, and hallucinate things that make no sense. Hence, the ‘Why do we need farmers when food just comes from the store’ level of disconnection observed in urban populations.

In a heavily urban setting, humans train on ‘data sets’ that are nearly wholly artificial. The less time spent outside, the less time spent interacting with the real physical world around them, the less accurate their model of reality becomes. A rocky slope up a hill may be 100% real, a grass playing field may be 70% real, and a concrete sidewalk may be around 40% real. At some point, however, the ‘salted’ artificial data is sufficient to corrupt the real-world knowledge of individuals and cause model collapse.

I am reminded of an anecdote from when I was a child. A cousin came to play with my siblings and I. My family had been raised going camping and hiking and wandering the wilds since before I can remember. Somewhere around age 3 or 4, our cousin came to visit and we went cruising up a hill hiking with our fathers in tow.

This cousin, however, had grown up in a suburban hellhole where everything was artificial. As such, he found it nearly impossible to navigate a sloped hill. His experience with walking and running had only ever consisted of flat, soft, curated environments produced by other people. He had no experience, or ability, in navigating a dirt trail at a 20 degree incline. His neurological model of the world was trained on human-produced data, and could not function when confronted with reality.

The universal thesis for model collapse is that advanced modeling systems, when trained on information produced by entities of their own class, lose information fidelity inter-generationally. After multiple generations of training on poisoned datasets, the models themselves become delusional, hallucinate false information, and cease to function.

In the same way that AI models become delusional and hallucinate when too much AI-generated data is in the training dataset, humans also become delusional when too much human-generated data is in their training dataset.”
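The claim in the quoted passage that long-tail data is “forgotten or lost” across generations can be sketched with a toy resampling loop. This is an assumption-laden illustration, not the quoted author’s method nor the experiment in the Nature paper linked at the end of this post: each “generation” here simply learns by sampling, with replacement, from what the previous generation produced. The corpus, the topic labels and the function names are all hypothetical.

```python
import random


def next_generation(corpus, rng):
    """Build a new corpus by sampling the previous one with replacement.

    This is a crude stand-in for "training on output produced by
    entities of your own class": nothing new enters from outside.
    """
    return [rng.choice(corpus) for _ in corpus]


def run_resampling(generations=30, seed=7):
    """Return the number of distinct topics surviving in each generation."""
    rng = random.Random(seed)
    # Hypothetical "raw" corpus: one common topic plus 100 rare,
    # long-tail topics that each appear exactly once.
    corpus = ["everyday"] * 900 + [f"rare_{i}" for i in range(100)]
    distinct = [len(set(corpus))]
    for _ in range(generations):
        corpus = next_generation(corpus, rng)
        distinct.append(len(set(corpus)))
    return distinct


if __name__ == "__main__":
    for gen, topics in enumerate(run_resampling()):
        print(f"generation {gen}: {topics} distinct topics")
```

Once a rare topic fails to be sampled, no later generation can recover it, because every generation sees only its predecessor’s output. The count of distinct topics can therefore only fall or hold steady, never rise.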

The author then applies this to the famous Mouse Utopia experiment of John Calhoun, in which a handful of breeding-pair mice were put in a large artificial “world” with unlimited food and water and space for 6,000 mice. The researchers anticipated the mice would proliferate to the maximum limit of 6,000 and then experience some version of population overshoot.

But this isn’t what happened. The mouse population reached 2,200 and then collapsed as anti-social behaviors exploded and reproduction ceased. A wide variety of causal factors have been posited and discussed over the decades since the 1962-72 experiments. The Unified Theory of Model Collapse suggests the core causal mechanism is that the mice born in this artificial world of endless abundance “trained” on increasingly artificial data. This disconnect from “raw” real-world experience deranged not just individual mice but the entire society living in that crowded, artificial “urban” environment.

The author suggests the current human population has reached the urban population density (roughly 60% of humanity lives in urban settings) that triggers anti-social behaviors and a rapid decline in fertility / reproduction. Many explanations for the current sharp decline in birth rates in most developed-world economies have been offered, and the Unified Theory of Model Collapse sheds important light on the causal mechanisms of artificial environments and abundance.

In my latest book, Investing in Revolution, I explore this irony of abundance: since abundance was only temporary for 99% of humanity’s existence, an artificial world of abundance degrades our core survival mechanisms of adaptation to the point that we’ve lost the capacity to adapt that was kept sharp by scarcity and daily exposure to authentic experiences.

Ultra-Processed Life is artificial because the entire point is to replace messy authentic experiences with convenience and abundance. Just as AI programs cannot differentiate between “facts” and hallucinations, advanced economies / societies cannot recognize or explain their derangement because the implicit goal of Progress is to replace authentic experiences in the “raw” real world with artificial (and highly profitable) convenience and abundance.

The conclusion here is striking: the entirety of the modern world of Progress--urbanized, detached from the “raw” real world in service of convenience and abundance, a world where we “train” our mental models on “cooked,” curated, processed artifices--is all a vast, inter-connected, self-reinforcing hallucination bound for a collision with an inconveniently “raw” real world.

AI models collapse when trained on recursively generated data (nature.com)

CHS NOTE: I understand few readers can afford to support my work, and I appreciate you few who can and choose to subscribe in recognition that something here may change your life in a highly useful way. I’ve been doing this for 20 years, and yes, it takes time and effort and yes, it’s unpaid if no one subscribes. That’s the deal. Thank you for supporting my work.
